Data for improvement

When testing your change ideas with PDSA cycles, you need to collect data to determine whether each change has resulted in an improvement. Collect this data in 'real time' rather than retrospectively.

Most of this data is likely to be quantitative, but qualitative data can be equally informative.

Refer to the following pages for capturing data: Surgical Antibiotic Prophylaxis fact sheets and IV to Oral Antibiotic Switch measurement strategy.

What is a Family of Measures?

One measure alone is insufficient to determine whether improvement has occurred. Include one or two measures from each of the following three categories: outcome, process and balancing measures.

It is important to define the numerator and denominator and provide an operational definition for each measure to ensure data consistency (see example on outcome measures).

Outcome measures are closely aligned with your aim statement or the overall impact you are trying to achieve. They relate to how the overall process or system is performing, the end result.

Example: Percentage of eligible patients on IV antibiotics that are stopped or switched to oral therapy within 24 hours (target ≥ 95%).

- Numerator: Number of eligible patients on IV antibiotics that are stopped or switched to oral therapy within 24 hours.

- Denominator: Number of eligible patients on IV antibiotics (those meeting the criteria below).

- Operational definitions: "Eligible patients" are those who meet the criteria for a safe and appropriate switch to oral antibiotic therapy according to guidelines. The criteria for inclusion are:

  - clinically stable

  - able to tolerate oral medication

  - an appropriate oral antibiotic is available

  - patient is likely to adhere to oral therapy

  - patient/carer agrees with the plan.
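
As a minimal sketch of how the numerator, denominator and percentage for this outcome measure could be calculated from a simple data collection sheet. The record fields `eligible` and `hours_to_switch` are hypothetical names chosen for illustration, not part of QIDS or any other tool, and the starting point for the 24-hour window is an assumption you would need to fix in your own operational definition.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class PatientRecord:
    # Hypothetical fields for illustration only.
    eligible: bool                    # meets all inclusion criteria
    hours_to_switch: Optional[float]  # None if IV therapy not yet stopped or switched


def outcome_measure(
    records: List[PatientRecord], window_hours: float = 24.0
) -> Tuple[int, int, Optional[float]]:
    """Return (numerator, denominator, percentage) for the outcome measure."""
    eligible = [r for r in records if r.eligible]
    denominator = len(eligible)
    numerator = sum(
        1
        for r in eligible
        if r.hours_to_switch is not None and r.hours_to_switch <= window_hours
    )
    # Avoid dividing by zero when no eligible patients were sampled.
    percentage = 100.0 * numerator / denominator if denominator else None
    return numerator, denominator, percentage


# Made-up sample data, not real patient data.
records = [
    PatientRecord(eligible=True, hours_to_switch=12.0),
    PatientRecord(eligible=True, hours_to_switch=30.0),
    PatientRecord(eligible=True, hours_to_switch=None),
    PatientRecord(eligible=False, hours_to_switch=None),
    PatientRecord(eligible=True, hours_to_switch=20.0),
]

numerator, denominator, percentage = outcome_measure(records)
print(f"{numerator}/{denominator} = {percentage}%")
```

Patients who are not eligible are excluded from both the numerator and the denominator, which is why a clear operational definition matters for consistent results.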

Process measures show whether the parts or steps in the process are performing as planned. They are logically linked to achieving the intended outcome or aim.

Example: Median time taken to switch eligible patients to oral antibiotics.
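
A minimal sketch of this process measure, assuming the time to switch has been recorded in hours for each eligible patient (the values below are made up for illustration):

```python
from statistics import median

# Hypothetical times (in hours) taken to switch each eligible patient.
hours_to_switch = [12.0, 30.0, 20.0, 18.5, 26.0]

# The median is the middle value once the times are sorted, so a single
# very long delay does not distort it the way it would distort a mean.
median_hours = median(hours_to_switch)
print(f"Median time to switch: {median_hours} hours")
```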

Balancing measures look at the system from different directions or dimensions. They determine whether changes designed to improve one part of the system are causing new problems in another part of the system.

Example: The number of patients recommenced on IV antibiotics within 48 hours after oral switch.
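
A minimal sketch of how this balancing measure could be counted, assuming you record the hours between the oral switch and any restart of IV antibiotics for each patient. The function name `count_recommenced` and the use of `None` for patients who were not recommenced are hypothetical choices for illustration.

```python
from typing import List, Optional


def count_recommenced(
    hours_until_iv_restart: List[Optional[float]], window_hours: float = 48.0
) -> int:
    """Count patients restarted on IV antibiotics within the window after oral switch."""
    return sum(
        1
        for hours in hours_until_iv_restart
        if hours is not None and hours <= window_hours
    )


# Made-up data: None means the patient was not recommenced on IV antibiotics.
hours_until_iv_restart = [None, 36.0, None, 72.0, None, 10.0]

recommenced = count_recommenced(hours_until_iv_restart)
print(f"Recommenced on IV within 48 hours: {recommenced}")
```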

The outcome, process and balancing measures provided are examples and can be adapted to your project and context.

What to consider before collecting data?

Think critically about what data you collect, how much you collect, where you record it and who can assist.

Before commencing PDSA cycles, you should:

- Review any baseline or historical data on performance of the process to be improved

- Consider the need to collect baseline data for the measures in order to determine the impact of the project

- Agree on what should be measured, including who will collect the data for each measure, and when, where and how

- Determine the most efficient way to access and collect the data

- Consider how useful the data will be and how you will present it (don't collect unnecessary data that won't be used)

- Decide where to record data and how it will be accessed by the team (for example, a spreadsheet or, preferably, QIDS)

- Consider assigning responsibility to individual team members for data collection for each measure

- Speak with staff and patients to hear about their experiences while you are testing

- Plan to continue collecting data after the project to check that the improvements are sustained.

The key to data collection is not quantity. Rather than collecting a large sample, make sure the data is project-specific and collected continuously so that it is meaningful to present.

Collect enough data to understand whether the changes you are making are resulting in an improvement. With too little data you won't be able to see improvement, and collecting too much is an over-investment of time and resources.

As a minimum, it is recommended that you collect five to 10 data points each week (for example, collect data on five to 10 patients).

This will vary depending on the size of your health service and the frequency of the problem. Regardless, it is recommended that the data you collect is either consecutive (for example, the first five patients) or random.
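
The two sampling approaches can be sketched as follows. This is an illustration only: the patient identifiers and the function names `consecutive_sample` and `random_sample` are hypothetical, and the list is assumed to be in the order patients presented during the week.

```python
import random
from typing import List, Optional


def consecutive_sample(patient_ids: List[str], n: int) -> List[str]:
    """Take the first n patients in the order they presented."""
    return patient_ids[:n]


def random_sample(
    patient_ids: List[str], n: int, seed: Optional[int] = None
) -> List[str]:
    """Take n patients at random, without choosing the same patient twice."""
    rng = random.Random(seed)
    return rng.sample(patient_ids, min(n, len(patient_ids)))


# Made-up identifiers for the eligible patients seen in one week.
week_patients = [f"P{i:02d}" for i in range(1, 13)]

first_five = consecutive_sample(week_patients, 5)
random_five = random_sample(week_patients, 5, seed=1)
print(first_five)
print(random_five)
```

Either approach avoids hand-picking patients, which could bias the results towards cases where the process is known to have worked.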

Speak to your local quality improvement advisor about how much data to collect.

Contact data experts or colleagues who can advise you on the type of data to collect and how much to collect (they may already have the data you need):

- Health information data team

- ICT/eMeds team

- Local clinical governance/patient safety team

- Medical records

- Pharmacy department

- Executive Sponsor.

How do you make sense of and present your data?

Once data has been collected and entered in a spreadsheet or QIDS, you need to interpret the data in a meaningful way to determine if an improvement has occurred.

QIDS has the functionality for you to easily build different charts suitable for your improvement project.

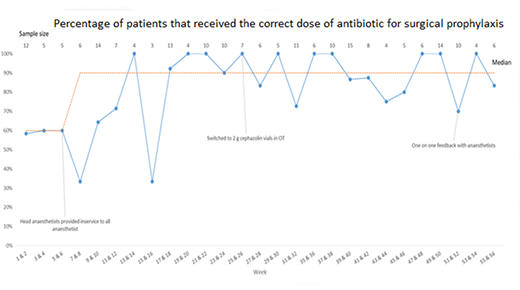

Run charts are line graphs showing data over time. Run charts are an effective tool to tell the project story and communicate the project's achievements with stakeholders.

Run charts illustrate what progress has occurred, what impact the changes are having and ultimately, if improvement is happening. Including annotations in your run chart will help to show when change ideas have been tested and may be associated with an improvement.
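
As a rough sketch of the data behind an annotated run chart, the example below prints weekly percentages in time order with a note against the week a change idea was tested. All values and the annotation text are made up for illustration. The median centre line is a common run chart convention and is an assumption here; QIDS or a spreadsheet would normally draw the chart for you.

```python
from statistics import median

# Hypothetical weekly results: week number -> % of eligible patients
# stopped or switched within 24 hours.
weekly_percentages = {1: 60.0, 2: 65.0, 3: 80.0, 4: 85.0, 5: 90.0}

# Hypothetical annotation marking when a change idea was tested.
annotations = {3: "PDSA cycle 1 tested"}

# Median of all weekly points, used as the centre line.
centre_line = median(weekly_percentages.values())

for week, pct in sorted(weekly_percentages.items()):
    bar = "#" * round(pct / 5)        # crude text bar, one '#' per 5%
    note = annotations.get(week, "")  # empty when nothing was tested that week
    print(f"Week {week}: {pct:5.1f}% {bar} {note}".rstrip())

print(f"Centre line (median): {centre_line}%")
```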

There are specific rules for interpreting run charts, which can be found via the CEC Academy webpages. Your local QI advisor may be able to assist with the display and analysis of data.

There are several other charts (for example, histograms and SPC charts) that can be used to present your data. Visit the CEC Academy webpages for more information.

Determining if improvement has really happened and if it is lasting requires observing patterns over time. Probability-based rules are helpful to detect non-random evidence of change.

For more information on types of data, minimum data points and the probability-based rules, visit the CEC Academy webpages. It is recommended that you contact your local QI advisor for assistance.

For example, if you are using a run chart, an improvement is considered reliable when six consecutive data points are above 95%; that is, compliance with the newly implemented process is consistently above 95%.
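
The six-consecutive-points example can be checked with a short sketch like the one below. It assumes one percentage per data point in time order and treats "above 95%" as strictly greater than 95; the function name `is_reliable` is hypothetical, and this covers only this one example rather than the full set of probability-based rules.

```python
from typing import Sequence


def is_reliable(
    points: Sequence[float], threshold: float = 95.0, run_length: int = 6
) -> bool:
    """Return True if `run_length` consecutive points are above `threshold`."""
    run = 0
    for value in points:
        # Extend the current run, or reset it when a point is not above the threshold.
        run = run + 1 if value > threshold else 0
        if run >= run_length:
            return True
    return False


# Made-up weekly compliance percentages.
improving = [80.0, 90.0, 96.0, 97.0, 98.0, 96.0, 99.0, 97.0]
interrupted = [96.0, 97.0, 98.0, 96.0, 99.0, 90.0, 97.0]

print(is_reliable(improving))
print(is_reliable(interrupted))
```

In the second series the run is broken by the sixth point, so the count restarts and the improvement is not yet considered reliable.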

Once the project team is confident that the change idea (or bundle of change ideas) is resulting in a reliable improvement, the team can start developing the project evaluation.

Refer to the 5x5 Antimicrobial Audit pilot project evaluation page as an example.